GPT-NYC — Training an NLP model on local knowledge of New York City

When I recently chatted with members of a fiction-generation AI startup, one of my pre-written questions was whether a model could be trained for a specific non-fiction location. The question came from my idea for a model trained on the AskNYC subreddit. I see three ways to approach it:

- In a semantic search approach, we would just return the answers to a textually similar question.

- In a question-answering (QA) approach, this would be framed as a retrieval or knowledge graph problem where we give the model some authoritative data, and train it to retrieve appropriate and relevant answers.

- In a natural language generation (NLG) approach that I’ll use in this post, we don’t have one answer or source for queries like ‘what are the most romantic restaurants’, so a GPT or T5 architecture model can be fine-tuned for a NYC use-case.

A risk here is that results may sound right but not be factually true.

Challenge Questions

(written prior to training)

- How do I get to Governor’s Island? / Activities on Governor’s Island

- Where do you live and what is great about it?

- Is Bushwick good for families?

- How often do you go to Central Park? / Times Square?

- Where can I eat South Indian food such as dosa? / upma?

- Which is worst: the G train, LaGuardia, or JFK airport?

Getting the data

/r/AskNYC was created in August 2012. Locals’ Reddit activity is currently divided between /r/nyc and /r/newyorkcity, with community rules often redirecting questions from tourists and newer residents to /r/AskNYC. This is more typical of a state or country subreddit (/r/HawaiiVisitors and /r/JapanTravel) than a city (/r/chicago being a single location).

PushShift.io has monthly Reddit archives from January 2006 to December 2019, a range that covers /r/AskNYC from its start until just before Covid-era questions.

I downloaded comments from January 2015 (32 GB unzipped JSON lines) and filtered with cat RC_2015-01 | grep 't5_2uqch' > asknyc.jsonl, leaving only 5,478 comments.
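One caveat with grep is that it matches the ID anywhere in the line, including inside a comment body. A minimal Python sketch of the same filter, assuming each line is a JSON object with a subreddit_id field (the function names here are mine, not from the original notebook):

```python
import json

ASKNYC_ID = "t5_2uqch"  # the subreddit ID used in the grep command


def filter_subreddit(lines, subreddit_id=ASKNYC_ID):
    """Yield parsed comments whose subreddit_id field matches exactly."""
    for line in lines:
        # Cheap substring check first, so most lines are never parsed
        if subreddit_id not in line:
            continue
        try:
            comment = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip truncated or malformed lines
        if comment.get("subreddit_id") == subreddit_id:
            yield comment


def filter_file(in_path, out_path, subreddit_id=ASKNYC_ID):
    """Stream a monthly dump to a smaller JSON lines file; return the count kept."""
    kept = 0
    with open(in_path, encoding="utf-8") as src, \
            open(out_path, "w", encoding="utf-8") as dst:
        for comment in filter_subreddit(src, subreddit_id):
            dst.write(json.dumps(comment) + "\n")
            kept += 1
    return kept
```

Because the file is streamed line by line, the 32 GB dump never has to fit in memory.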

When I downloaded June 2019 (164 GB unzipped), that month had 19,824 AskNYC comments. I decided to include additional examples from other months: October 2016, August 2017, and April 2018.

I initially planned to scrape the original questions from Reddit, but found it easier to download and filter them also from PushShift.

Of 67,195 total collected comments, only 13,400 are direct responses to the parent question (in thread-speak: children and not grandchildren posts).
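Separating children from grandchildren can be sketched with Reddit's ID prefixes: a parent_id starting with t3_ points at a post, while t1_ points at another comment. This assumes the comment records carry parent_id and link_id fields; the helper names are hypothetical.

```python
from collections import defaultdict


def is_direct_reply(comment):
    """True when the comment's parent is the post itself, not another comment."""
    # Reddit "fullnames" prefix posts with t3_ and comments with t1_
    return comment.get("parent_id", "").startswith("t3_")


def direct_replies_by_post(comments):
    """Group direct replies under the ID of the post they answer."""
    grouped = defaultdict(list)
    for comment in comments:
        if is_direct_reply(comment):
            grouped[comment["link_id"]].append(comment)
    return dict(grouped)
```

Grouping by link_id makes it straightforward to pair each answer with its question once the posts are loaded.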

Considering Bias and Toxicity

We can anticipate dual issues of bias and toxicity in generated content. We want our NYC travel guide AI to be positive and knowledgeable about many cultures and experiences of New Yorkers. It’s not enough to be polite if the model is limited to one borough, advises against trying Persian food, or describes religious practices as ‘strange’.

We can use upvote counts and initial moderation to help curate our comment dump. Admittedly /r/AskNYC is a public site with its own problems. Questions range from “Where do people buy matzah in NYC?” to “Why are all of the camera stores in NYC operated by Orthodox Jews?”, and “Homeless people in our basement” to “Any NYC’ers have experience helping the homeless sign up for their stimulus checks?”.

Any question about minority communities could be read as curious or as other-ing. People bring these questions to /r/AskNYC when they don’t know someone in their network who could answer, or when asking could be seen as confrontational, racist, or homophobic. Redditors tend to upvote succinct, personal experiences, and/or politically popular answers to these questions.
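As a first pass, the upvote and moderation signals could be applied with a filter like the one below. This is only a sketch: it assumes each comment has body and score fields, treats the [removed] and [deleted] placeholder bodies as a stand-in for moderation, and uses a min_score threshold that I made up for illustration.

```python
PLACEHOLDER_BODIES = {"[removed]", "[deleted]"}


def keep_comment(comment, min_score=2):
    """Keep comments that still have text and meet a minimum score."""
    body = comment.get("body", "").strip()
    if not body or body in PLACEHOLDER_BODIES:
        return False
    # min_score is an illustrative threshold, not a value from this post
    return comment.get("score", 0) >= min_score


def curate(comments, min_score=2):
    """Return only the comments that pass keep_comment."""
    return [c for c in comments if keep_comment(c, min_score)]
```

Given the upvoting habits described above, a score filter removes some low-quality replies but does nothing on its own about popular answers that are biased.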

In Part 2, I plan to read into multiple papers to better regulate the output of the GPT-NYC model.

NLG (GPT-2) approach

Background

The GEM benchmark combines multiple language generation tasks. Two tasks include text constrained to a particular topic such as a restaurant. One of the examples is in Czech so I cannot evaluate it; the other uses data entries / coded input to generate sentences limited to a restaurant topic.

Preparing my Dataset

CoLab link

You can download the dataset here.

Finding Additional Tokens

CoLab link

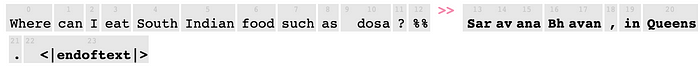

I would like to tokenize streets, neighborhoods, transit stations, people, foods, and events so we don’t drop NYC-specific information during training. I compare the text to tokenizers to figure out which common tokens were missing from standard GPT-2 and T5.

The top 200+ missing tokens include

touristy, gentrified, Midtown, nyc, blizzard, blotter, Bushwick, Adjudication, bellboy, oncoming, dumpling, bagel, deli, halal, stroller

Along with many other places and street numbers.

This doesn’t mean that GPT-2 is blind to all of these words — if every occurrence of stroller has been baked into GPT’s stroll and +er tokens, then it might be learned (try prompting it though…) Missing ‘bagel’, ‘burrito’, ‘dumpling’, and ‘halal’ is going to under-serve a lot of New Yorkers, Americans, and people globally.
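The comparison against a tokenizer vocabulary can be sketched roughly as follows. This is not the code from the linked CoLab; it assumes the vocabulary is available as a set of token strings and counts the words in the comments that never appear as a single token.

```python
import re
from collections import Counter

# GPT-2's byte-level BPE marks a token that follows a space with the
# character U+0120, so each word is checked bare and with that prefix.
GPT2_PREFIXES = ("", "\u0120")


def missing_words(texts, vocab, prefixes=GPT2_PREFIXES, min_count=2):
    """Return (word, count) pairs for words with no single-token entry in vocab."""
    counts = Counter()
    for text in texts:
        for word in re.findall(r"[A-Za-z]+", text):
            if not any(prefix + word in vocab for prefix in prefixes):
                counts[word] += 1
    # Most frequent first, dropping rare words
    return [(w, n) for w, n in counts.most_common() if n >= min_count]
```

With the Hugging Face transformers library, the vocabulary set could come from a tokenizer's get_vocab() method. For T5, whose SentencePiece vocabulary marks word starts with U+2581, the prefixes argument would change to match.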

I decided to add this list, subway stations, street ordinals from 1st to 220th, neighborhoods, and the foods from The world’s 50 best foods and 99 things to eat that will blow your mind (not NYC-specific, but the best lists I could find with both dosa and pho).
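The street numbers are easy to generate rather than type out. A small sketch (the ordinal helper is my own, not from the notebook):

```python
def ordinal(n):
    """Format a positive integer as an English ordinal, e.g. 42 -> '42nd'."""
    # 11th, 12th, and 13th (and 111th, 112th, ...) break the usual rule
    if 10 <= n % 100 <= 20:
        suffix = "th"
    else:
        suffix = {1: "st", 2: "nd", 3: "rd"}.get(n % 10, "th")
    return f"{n}{suffix}"


# Candidate tokens for numbered streets, 1st through 220th
street_tokens = [ordinal(n) for n in range(1, 221)]
```

With Hugging Face transformers, a list like this is typically registered with the tokenizer's add_tokens method, followed by resize_token_embeddings on the model so the new IDs get embedding rows.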

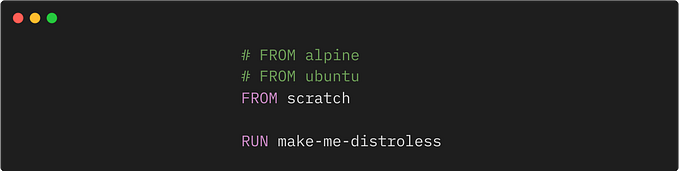

When I first added the vocabulary, these new tokens were selected nonsensically, so we need to continue training.

Fine-tuning GPT2

I started by fine-tuning GPT2 [small] on Google CoLab.

I was pleasantly surprised with results which were generally coherent, and sometimes funny, even if short on facts.

I was motivated to continue my work on a larger model.

I also redesigned the prompts to the format: “question — additional info %% comment”. This avoids a particular mode where the question and additional info are continued by the model.
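The format can be captured in a few helpers. This is a sketch under assumptions: the exact separator character and whitespace used in training are not shown in this post, so the constants below are my reading of the format string.

```python
SEPARATOR = " \u2014 "  # the dash printed in the format above (assumed character)
ANSWER_MARKER = "%%"


def build_prompt(question, info=""):
    """Build 'question [dash info] %%', leaving the comment for the model."""
    prompt = question.strip()
    if info.strip():
        prompt += SEPARATOR + info.strip()
    return f"{prompt} {ANSWER_MARKER}"


def build_training_example(question, info, comment):
    """Full training line: the prompt followed by the Reddit comment."""
    return f"{build_prompt(question, info)} {comment.strip()}"


def extract_answer(generated):
    """Return only the text after the first %% marker in model output."""
    if ANSWER_MARKER in generated:
        return generated.split(ANSWER_MARKER, 1)[1].strip()
    return generated.strip()
```

Ending every prompt with the marker tells the model that the question is finished, which is what discourages it from continuing the question text instead of answering.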

The GPT2-Medium model was too big to fine-tune on the CoLab GPU, so I needed to seek out an A100 GPU with >16GB of on-device memory. It takes about 2 hours to train for 5 epochs. There were shortages of this machine type in the US, so I ended up running this command in an EU cloud.

gcloud compute instances create Sample --project Project --zone europe-west4-a --machine-type a2-highgpu-1g --image-family pytorch-1-6-cu110 --image-project deeplearning-platform-release --boot-disk-size 100GB --metadata "install-nvidia-driver=True,proxy-mode=project_editors" --scopes https://www.googleapis.com/auth/cloud-platform --maintenance-policy TERMINATE

Results

- How do I get to Governor’s Island?

- Where do you live and what is great about it?

- Is Bushwick good for families / parties?

- How often do you go to Central Park / Times Square?

- Where can I eat South Indian food such as dosa?

This is actually accurate!

- Which is worst: the G train, LaGuardia, or JFK airport?

I’m happy with these answers, though they seem to suffer from memorization, and over-interest in the new vocabulary. A more rigorous probe of responses might reveal that instead of answering the original question, you are prompting a handful of responses: riffing on apartments, riffing on food, dismissing the question (such as ‘no’ or ‘anywhere’), or the memorized URLs and responses.

Demo

You can try it yourself with the HuggingFace hosted inference API widget.

Stick to the formula: question — info %%

https://huggingface.co/monsoon-nlp/gpt-nyc?text=What+food+should+I+try+in+the+Bronx%3F+%25%25
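A link like the one above can be built for any question with the standard library. A small sketch (demo_url is a hypothetical helper name):

```python
from urllib.parse import quote_plus

MODEL_PAGE = "https://huggingface.co/monsoon-nlp/gpt-nyc"


def demo_url(prompt):
    """Return a model-page link with the prompt pre-filled in the widget."""
    prompt = prompt.strip()
    # Make sure the prompt ends with the %% marker the model was trained on
    if not prompt.endswith("%%"):
        prompt += " %%"
    # quote_plus turns spaces into + and escapes ? and % for the query string
    return f"{MODEL_PAGE}?text={quote_plus(prompt)}"


print(demo_url("What food should I try in the Bronx?"))
```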